Testing Cambium Terragraph hardware

Cambium V3000 client node on an apartment building roof

After a couple of months of testing Siklu’s Terragraph hardware (see related article), Cambium approached us about running a side-by-side test of their solution. We have had a long working relationship with Cambium on their ePMP product line, so despite some “testing fatigue”, we agreed to try out the V5000 distribution node and the V3000 client nodes on a site in our downtown area.

The chosen test site consists of:

A V5000 connected via 10 Gbps fiber to a core switch and powered by 48 VDC.

Five V3000 client nodes all within 300 meters servicing a large apartment building full of college students, a food hall with free WiFi, a couple condo buildings and the city Visitors Center.

All sites are fairly heavy users, especially the apartment building with the college students. Before this test, these sites were served by four different 60 GHz APs, all at the same location as the proposed V5000 install. We had a mix of MikroTik and Kwikbit 802.11ad products. Much like our other test site with Siklu, we were seeing self-interference at this location due to the number of 802.11ad radios (three others are also on this same roof but not part of this test).

V3000 client node on a condo building roof

The Cambium Terragraph solution requires an E2E controller that handles the back end work of connecting the links, sending configurations, upgrading firmware, etc. This is tied into Cambium’s cnMaestro solution. There are two options for this controller:

Run it on the V5000 distribution node

Run it as a container on an on-premise box

If you use the onboard E2E controller on a radio, you can use the web interface of that radio to control everything. Or, in either case, you can attach the controller to your cnMaestro instance and manage it through that. We run cnMaestro as an on-premise server on our Proxmox box. You can also run the cloud-hosted version of cnMaestro.

We initially spun up the E2E container on an Ubuntu instance on our Proxmox box. This was then connected to the cnMaestro on-premise server. Once we started working with Cambium tech support on the initial configuration, they suggested we use the onboard controller on the V5000 radio. This was partially because the radios must be able to see the E2E controller to get their programming. We were programming these radios offline in the shop and they did not have layer 2 connectivity back to the E2E controller we had set up.

Cambium has provided a list of videos that help with the deployment of your first link. We had a call with Cambium engineers to help get our link operational - but it is not difficult to follow the video instructions. Once your first link is up, the rest become trivial.

We deployed our links with Cambium’s cnWave software version 1.2-beta3. This was at the request of Cambium engineers. We normally do not run beta code on the network but as part of our testing agreement, we would run their latest code for this project. So you don’t have to skip to the end, this code branch appears to be very stable. We have not had any software issues.

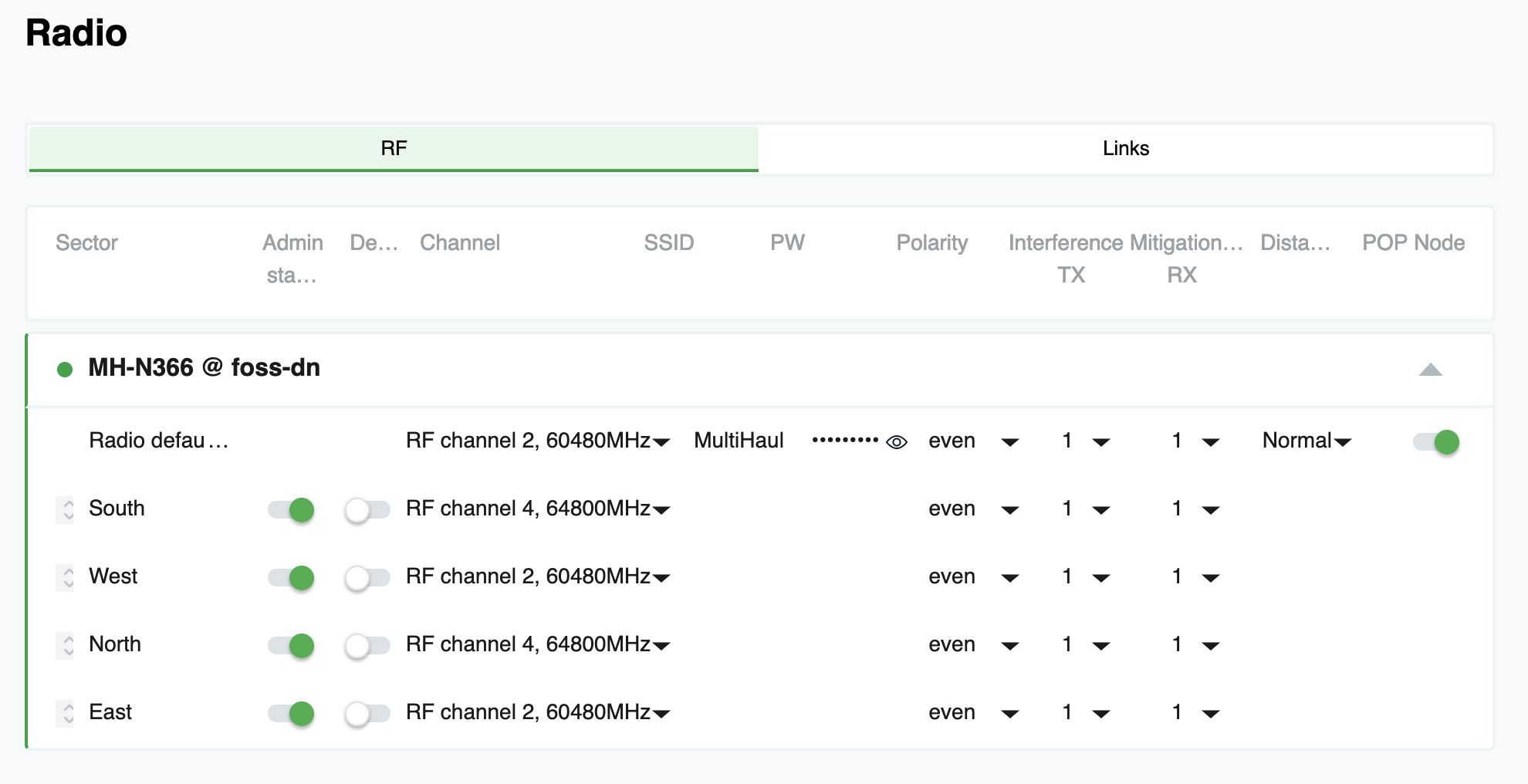

Step 1 is to configure your V5000 distribution node to run as your E2E controller and make it your “POP Node”. This is important in the Terragraph world and helps set the network topology.

We then set the channels we were going to use on the two radio sectors as well as the IPv4 information for management. Yes, there is a bunch of IPv6 going on but Cambium has made this automatic. We did not touch any IPv6 settings - we let the E2E controller handle all of that for us. You can get in there and build your own IPv6 networking for this. You can use BGP on your IPv6 network. We opted to keep it simple for this test and since we are running this as a “star” configuration, we did not feel the need to customize this portion of it. We did turn on “Layer 2 bridge” mode which is important for us. This basically creates a layer 2 bridge across the IPv6 network so as far as your devices are concerned, they are on a layer 2 bridge.

Star configuration of the test network with 4 sites operational

Once the V5000 is configured and the E2E controller is running, you add your client sites. This is where things got pretty cool.

From cnMaestro, you need to do a couple of things to get your first link working:

Add a “site” to your configuration. Think of this as your building where the client radio will go.

Add a “node” to that site you just created. A node is your physical radio.

All you need for the site is a location on a map. All you need for the node is the MAC address of the radio you are going to deploy there.

Adding a node to a site in cnMaestro

Now, the final step is to build a “link” from your DN (the V5000) to your node (V3000 in our case). This link is what tells the controller this radio is authorized to connect. When you build a link, this is done in cnMaestro and you only need to tell it the A and Z end of the link. On the A end, you tell it which of the two sectors you are going to connect to on the V5000 and on the Z end, there is only one radio.

Creating a link in cnMaestro

List of existing links for this site

Once the link is built in cnMaestro, all you need to do is deploy the radio. You don’t need to touch it or log into it in advance. You can take a brand new radio out of the box and put it on a roof. As long as that radio MAC address is attached to the “node” in cnMaestro and the link is built, it will work. When the radio starts to beacon, it will see the V5000 and the E2E controller will authorize the radio to connect. Once it is connected, the controller will automatically send the configuration to the new node (IP address, VLANs, etc) and can push new firmware.
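The site, node and link relationship described above can be sketched as a small data model. This is an illustration only, not cnMaestro's or the E2E controller's actual API: every class, field and function name here (`Site`, `Node`, `Link`, `Topology`, `is_authorized`) is hypothetical, and the authorization rule is simply my reading of the behavior we observed (a radio connects only if its MAC is attached to a node that has a link built to it).

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Site:
    """A building or roof; all it needs is a location on a map."""
    name: str
    latitude: float
    longitude: float


@dataclass(frozen=True)
class Node:
    """A physical radio at a site, identified by its MAC address."""
    name: str
    site: Site
    mac: str


@dataclass(frozen=True)
class Link:
    """A end is the DN (plus which sector), Z end is the client node."""
    a_node: str
    a_sector: int
    z_node: str


@dataclass
class Topology:
    nodes: dict = field(default_factory=dict)  # node name -> Node
    links: list = field(default_factory=list)

    def add_node(self, node: Node) -> None:
        self.nodes[node.name] = node

    def add_link(self, link: Link) -> None:
        if link.a_node not in self.nodes or link.z_node not in self.nodes:
            raise ValueError("both ends of a link must be existing nodes")
        self.links.append(link)

    def is_authorized(self, mac: str) -> bool:
        """True if the MAC belongs to a node on the Z end of some link."""
        names = {n.name for n in self.nodes.values()
                 if n.mac.lower() == mac.lower()}
        return any(link.z_node in names for link in self.links)
```

In this sketch, a brand new radio whose MAC was entered in the office would pass `is_authorized` the moment it starts to beacon, while an unknown radio on the same roof would not. The coordinates and MAC addresses you feed it are whatever you entered when planning the site.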

We found this process takes about 60 seconds from the time the new radio connects to the V5000 to the time it is fully programmed and able to pass traffic.

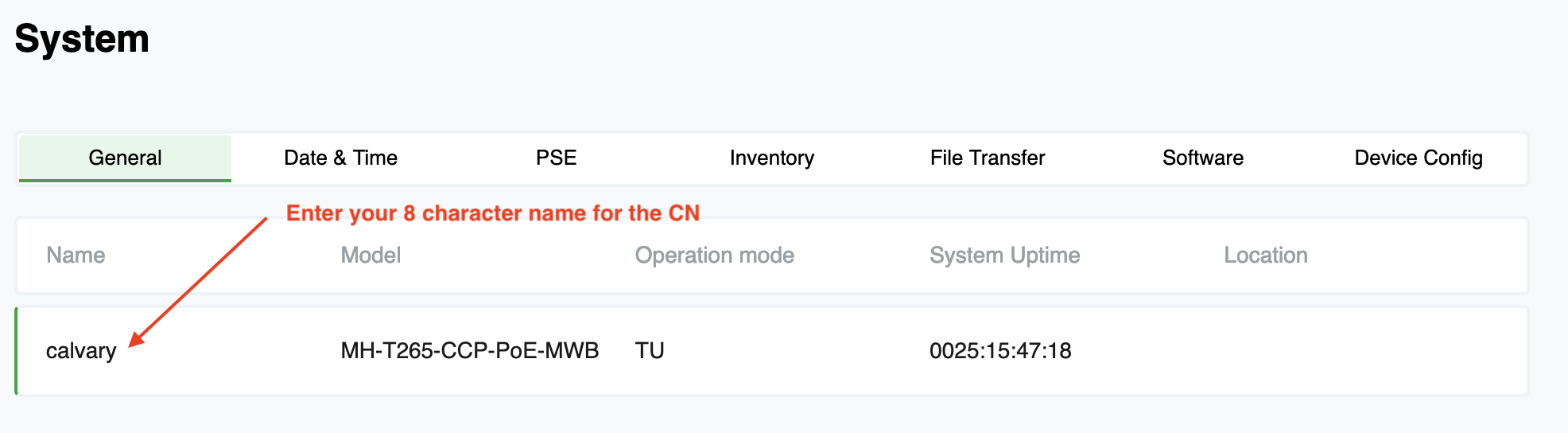

This is the part I really liked with Cambium. I never had to log into the client radio and do anything to it. In Siklu’s configuration, you need to log in and at a minimum, give the client radio a unique 8 character name. That name is what authorizes it to the distribution node. Cambium uses the MAC address for authentication and everything is pushed from the E2E controller to the new client. It allows us to program everything in the office and just hand a new radio to an installer to hang.

The Hardware

A main difference between Siklu and Cambium with the DN is the coverage. Siklu is 360 degrees of coverage with 4 90-degree sector antennas. Cambium is 280 degrees of coverage with 2 140-degree antenna sectors. That leaves ~80 degrees of no coverage on the back side of the DN.
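The coverage arithmetic is simple enough to check in a couple of lines. This is a minimal sketch that assumes the sectors sit edge to edge with no overlap; the function name `uncovered_azimuth` is just for illustration.

```python
def uncovered_azimuth(sectors: int, sector_width_deg: float) -> float:
    """Degrees of azimuth left uncovered, assuming non-overlapping sectors."""
    covered = min(sectors * sector_width_deg, 360.0)
    return 360.0 - covered


print(uncovered_azimuth(4, 90))   # Siklu DN: prints 0.0
print(uncovered_azimuth(2, 140))  # Cambium V5000: prints 80.0
```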

The DN has 2 copper Ethernet ports and an SFP+ port. One of the copper ports is PoE in, one is PoE out, and the SFP+ cage will handle up to 10 Gbps fiber modules. We are powering ours with 48 VDC into a terminal-to-Ethernet adapter (not PoE but just power).

The DN is all metal and appears well built. It is about the size of a shoe box with the ports on the bottom and the mounting hardware on the back.

V5000

We are using the V3000 exclusively for our client ends. They make a V1000 client radio as well that is much smaller. Our clients were all 200+ meters from the DN so we opted to test the high gain antenna (44.5 dBi). Using Cambium LinkPlanner, we could easily hit five 9’s of reliability in our rain zone with this antenna.
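To get a feel for why antenna gain matters at these distances, here is a rough free-space path loss calculation using the standard formula. It is a back-of-the-envelope sketch, not a substitute for LinkPlanner: it ignores rain fade and atmospheric absorption, both of which matter at 60 GHz, and the 60.0 GHz frequency is a nominal value I picked for illustration rather than the actual channel we used.

```python
import math


def fspl_db(distance_m: float, freq_ghz: float) -> float:
    """Free-space path loss in dB.

    Standard form: 20*log10(d_km) + 20*log10(f_GHz) + 92.45
    """
    if distance_m <= 0 or freq_ghz <= 0:
        raise ValueError("distance and frequency must be positive")
    d_km = distance_m / 1000.0
    return 20 * math.log10(d_km) + 20 * math.log10(freq_ghz) + 92.45


# Nominal 60 GHz carrier (an assumption, not our configured channel).
for distance in (200, 300):
    print(f"{distance} m: {fspl_db(distance, 60.0):.1f} dB")
```

Doubling the distance adds about 6 dB of free-space loss, so a few dB of extra antenna gain buys a meaningful amount of margin on the longer client links.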

A smaller antenna is available for the V3000 as well (40.5 dBi). It is the same exact radio, you just change the antenna that is bolted onto it.

V3000 with a 44.5 dBi antenna on it and a 40.5 dBi antenna on the floor

The precision mounting bracket for the V3000 is decent. It is a little difficult to install on the back of the V3000. The 4 bolts that hold it on are not all accessible with a wrench and you need to use a metric Allen wrench to tighten the bolts. Another tool to carry.

For fine adjustment aiming, it actually works pretty well and does not have much play in it as you tighten it down (a good thing).

You will want to invest in an aiming scope if you are doing any aiming past a couple hundred meters.

V3000 with a scope and Cambium aiming tube both installed

Cambium sells a plastic aiming tube (pictured on the right) that slides into a bracket. It works OK for short links. There is a mount on the top left side of the radio that will accept an aiming scope. This scope was dead on in our experience (looking your direction IgniteNet) and made aiming super simple.

Looking through the scope. V5000 is dead center.

Performance

Well, how well does it work? One of the issues we have heard about (and experienced) with Terragraph radios is a loss of overall throughput on a sector when a second client radio is added. This is an area we really wanted to test with Cambium.

Our test setup was an OS X laptop with the speedtest.net client testing back to our own in-house speedtest.net server running on a Proxmox box in the same rack where our fiber from the V5000 terminates. We tested by plugging the laptop directly into the V3000 client radio’s AUX Ethernet port.

With a single client radio on the V5000 sector, we were easily able to saturate the laptop’s Ethernet port. I don’t have a test configuration that lets me test faster than a gigabit Ethernet port. Sorry. But, since we are handing off with 1 Gbps connections in most cases, this was fine for us.

V3000 test with single radio on V5000 sector

We then added additional client radios to the V5000 and tested at each install. Once the V5000 sector got its second client attached, we did see a drop in overall throughput - mostly on the download side of things. We were no longer able to saturate the Ethernet port on the laptop.

V3000 test as second client on sector

We repeated these tests over and over again. We consistently see between 850 Mbps and 900 Mbps on the download and over 900 Mbps on the upload side.

We are NOT channel bonding on the V5000. This is with a single channel per sector.

We have been very pleased with this performance. We have taken down four 802.11ad radios, eliminated our self interference and are offering faster speeds to our customers with the Cambium hardware.

Latency has also decreased. Here is a client (the large apartment building) ping test before (using Kwikbit PtMP radios) and after on the Cambium Terragraph. This is a test from PRTG pinging a CCR1009 in the apartment building every 30 seconds. You are looking at a 2-day average result graph:

Ping test across wireless link

Would we spend our own money on this hardware moving forward? Without hesitation. It is a solid addition to a growing product line in the 802.11ay space and one we have had very good experience with.

January, 2022 update

As of the first of this year, we have installed a V3000 point to point link at 1,030 meters to test that part of the product portfolio. We are able to push ~950 Mbps through this link in our tests. I think we are currently limited by port speed: the link is not connected to fiber, only to the copper port, which negotiated at 1 Gbps.

In addition, we added a second V5000 and 7 clients to the network. These were a mix of V3000 and V1000 clients.

We have had one significant snow event. We got about 5” of very light cold snow one night. It did impact a couple of our V3000s. The point to point never went down but RSSI degraded and power increased to full on both ends. We had two client nodes bounce a couple of times and one go down for an hour until wind blew the snow off. Upon inspection, most of our V3000 radios had their reflector dishes totally covered in snow. These have been jokingly called a “snow shovel” and they did in fact act like that for this storm. I am afraid of what will happen in one of our wet spring storms when the snow really sticks to things and has a much higher water content.

We have also had a V5000 fail on us, and it is still with the support group at Cambium, who are trying to figure out why. When we ran the software upgrade from 1.2-beta4 to 1.2, the V5000 came back up after the reboot but the sector 1 radio was not working and the V5000 would reboot about every 10 minutes. We tried for about 90 minutes to get it working, including software upgrades and downgrades. We finally had to swap out the node with a spare. I will post the findings from Cambium, but we have requested an RMA on that unit. It had previously been upgraded a couple of times with various firmware without issue.

May, 2022 update

We have been working with Cambium testing some snow covers for these radios. After a couple big snow events, I can tell you the covers work well. We did not lose any links that had snow covers on them in our last two storms. These covers are still being tested and are not the final color or design but I can tell you Cambium is listening and working on a snow solution.

As for the V5000 that failed, Cambium reported there was a firmware issue with that one that caused a very rare event to take place. They say that has been fixed in the current 1.2.1 firmware.